Tuesday 2 May 2023

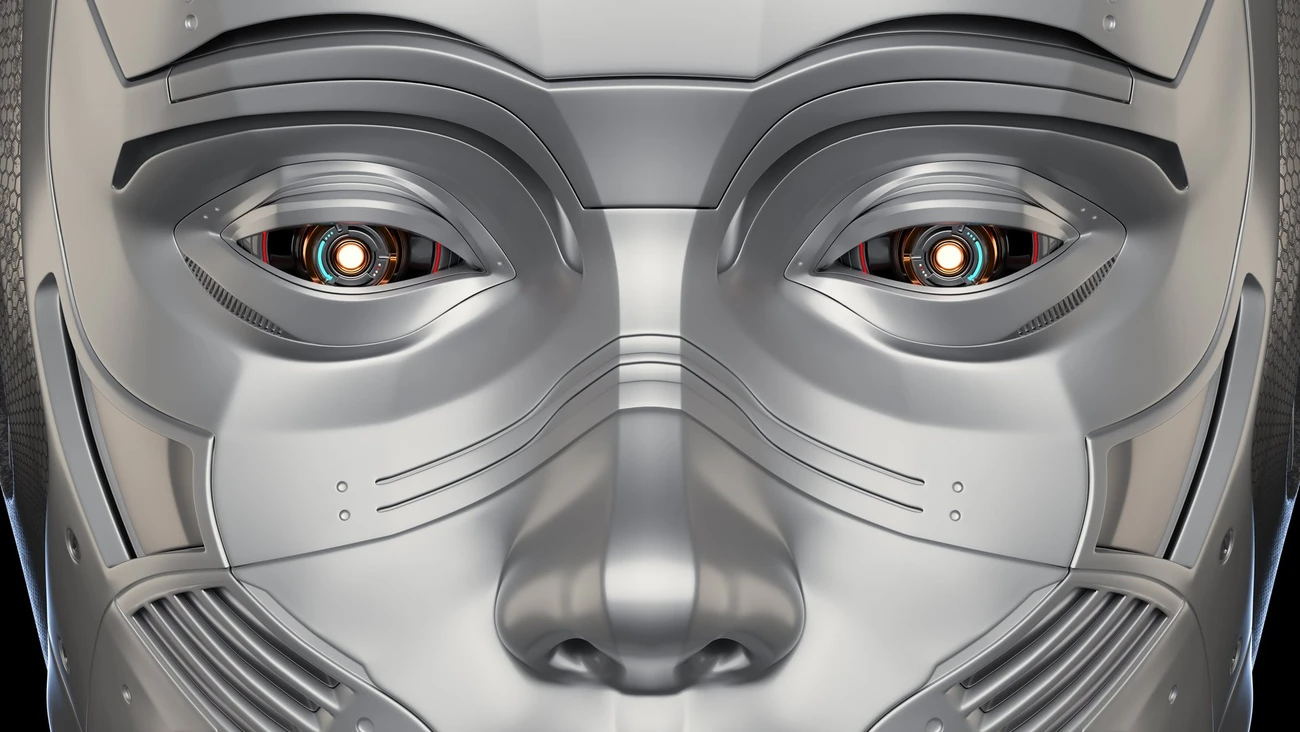

Does ChatGPT spell the end of humanity?

ChatGPT, Midjourney, Stable Diffusion

These names are igniting debate. Some are calling for a moratorium, while others warn of a hundred million job losses. At the heart of the debate is artificial intelligence, or more specifically "generative AI". After all, nobody is seriously proposing to do away with the AI-based autofocus in smartphone cameras or the digital assistants we use every day. But it is clear that we are entering a new era.

At the heart of the controversy is ChatGPT, a human-programmed technological prodigy that, on request, can write texts, act as a coach, generate ideas and much more. It does all this by drawing on a gigantic training database containing several billion items of content.

This technology is not new: its building blocks were invented many years ago, notably by Google. What makes it controversial is that it has recently become available to everyone and can produce good-quality texts in about a minute.

This almost magical quality fascinates some, who see it as an incredible tool for making everyday tasks easier, and frightens others, who foresee the possible end of humanity through the proliferation of automatically generated fake news and the destruction of millions of jobs.

To study the effects of this technology on the generation of both texts and images, we conducted a hands-on experiment with dozens of professionals and students from the IIM Digital School, the Devinci Executive Education MBA in artificial intelligence, and researchers from our Talan Group research centre.

The aim of the experiment was to carry out professional projects over four days. The first day was devoted to training participants in how these tools work and alerting them to the many errors that generative AIs produce. Most of the participants had no day-to-day experience with these tools. They then had to complete their ambitious project over the remaining three days of intensive work.

We were able to observe first-hand the spectacular results these tools delivered. The gains lay not in replacing the users in their jobs, but in increasing their creativity and productivity. The overwhelming majority of participants also said that training is necessary to take full advantage of these tools.

Other recent experience shows that simply having access to generative AIs is not enough to use them effectively. Many employees report that their unfamiliarity with these tools leads to real disappointment, which can turn into frustration and even lead them to give up on the tools altogether.

Beyond text generation, however, the particular case of automatically generated images, and soon videos, shows how quickly the controversy over these tools can go viral. Recent, iconic examples are the subject of intense debate: the fake images of a bleeding person surrounded by police officers, widely circulated on social media at the height of the pension-reform demonstrations in France, or the fabricated pictures of the French president in various situations.

Fake images are not new, however. They have been used for years in wars, by each side to sway opinion, as well as by extremist and conspiracy groups.

Yet the call for a moratorium flies in the face of history and is, in practice, impossible to enforce. While the most widely used tools are offered online by a publisher who oversees them, some generative AIs can be installed on personal computers and are therefore impossible to control. Still, the alarm that has been raised is a healthy one.

We must promote the responsible deployment of these tools, which must serve human beings and help them become more productive in their daily work, as the experiment described above showed. The solution does not lie in a ban, but in high-quality training that explains how these tools work to as many people as possible, together with a meaningful demand: let's campaign for greater transparency about the algorithms and the data used to train them.

This is also in line with Italy's wish to regulate these platforms more closely under the GDPR framework: to know what data these AIs have been trained on, and to regulate access for vulnerable populations.

Finally, let's remember that the job losses regularly predicted never materialise. In 2019, 120 million job losses were forecast over three years; now we are told that 300 million jobs are about to be destroyed? Stop the carnage.

Jobs and societies evolve with the technologies of each age. Training in and mastery of these tools, which must serve human beings, will augment human intelligence, and human intelligence is not about to be replaced by artificial intelligence.
